AI code generators promised to free data engineers from tedious work. But if you’ve ever tried to use them in real workflows, you know the disappointment - they produce SQL that looks right but works wrong. Instead of saving time, you end up spending hours reviewing AI’s output, debugging subtle errors, and praying nothing else was accidentally broken.

Why? Because AI doesn’t understand your data, and until it does, you won’t be able to fully delegate tasks to it.

The daily grind of a data engineer

If you’ve been in the role, you know the drill:

An upstream schema changes, and suddenly your pipelines are broken.

Finance decides revenue should be calculated differently in one region, so you’re adding yet another exception to the model.

You spend days trying to make sense of a new data source, just to map it into the next layer.

Data engineers get buried in small fixes and tickets, spending 40% to 70% of their time firefighting: patching pipelines, fixing mismatches, and repeating the same tedious work.

This isn’t the exception. It’s the daily reality.

The promise of AI code generators

So when AI-powered code tools showed up - Cursor, text-to-SQL assistants, “AI copilots” - it felt like a dream.

“Finally. Something to handle the boring stuff so I can focus on real business problems… or just pack up early and hit the beach.”

For software engineers, these tools really have been game-changing. For data engineers, they’re nice - helpful, sure - but they haven’t changed the game.

Not yet.

Looks right, works wrong

But here’s the problem: AI doesn’t know your data.

And if there’s one thing data engineers do know, it’s the data. Without that context, how can we expect AI to do the work for us?

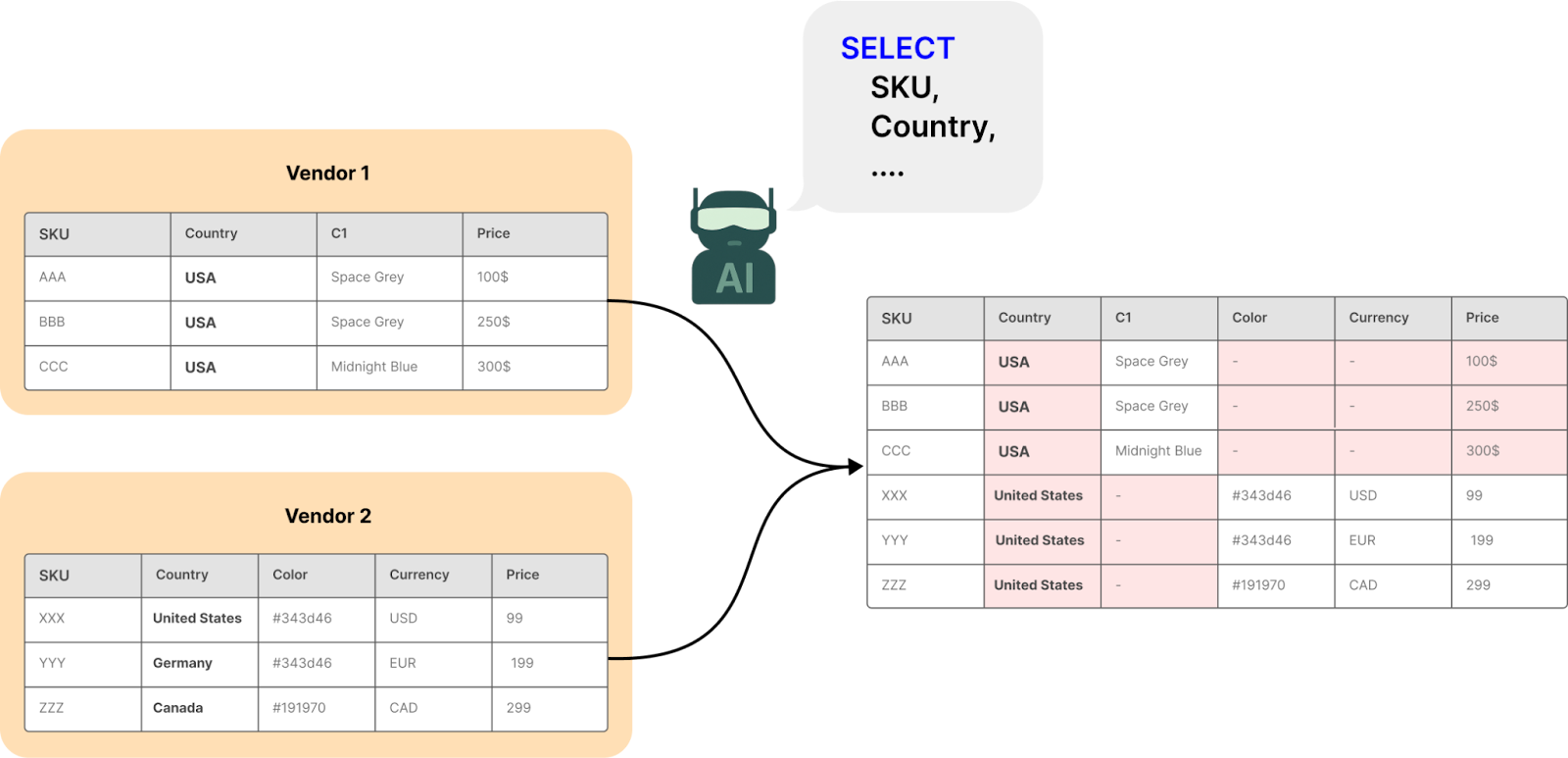

Take a product catalog as an example. You’re pulling in feeds from five vendors. The job is to unify them into one clean catalog. On paper, it looks simple - just UNION ALL and dedupe by SKU. In reality, the data tells a very different story:

The same product appears under different keys: IP14PM-256 vs. iPhone 14 Pro Max 256GB.

Units don’t match: one feed uses inches, another uses centimeters.

Prices mix USD, EUR, and GBP - sometimes with no currency flag at all.

Colors are inconsistent: "Space Gray", "Space Grey", "#333333".

And of course there’s the column "C1", with values 1, 2, 3, 4. What the *** does it mean?

These problems are visible in the data itself, and if the AI doesn’t catch them, it will happily generate code that looks correct yet leaves you with a catalog full of duplicates, broken filters, and apples-to-oranges comparisons.
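To make this concrete, here is a minimal Python sketch of the catalog example. Everything in it is hypothetical: the record layout, the SKU alias table, the color synonyms, and especially the exchange rates, which are placeholders rather than real data. It contrasts "UNION ALL and dedupe by SKU" with an approach that first normalizes keys, units, currencies, and colors:

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class VendorRow:
    sku: str
    screen_size: float
    size_unit: str            # "in" or "cm"
    price: float
    currency: Optional[str]   # some feeds omit the currency flag
    color: str

# Hypothetical lookup tables. In practice this knowledge has to be learned
# from the data and its producers; the code cannot invent it.
SKU_ALIASES = {
    "IP14PM-256": "iphone-14-pro-max-256",
    "iPhone 14 Pro Max 256GB": "iphone-14-pro-max-256",
}
COLOR_ALIASES = {"space gray": "Space Gray", "space grey": "Space Gray", "#333333": "Space Gray"}
USD_RATES = {"USD": 1.0, "EUR": 1.08, "GBP": 1.27}  # placeholder rates, not live data

def naive_catalog(rows):
    """UNION ALL + dedupe by raw SKU: looks right, works wrong."""
    return {row.sku: row for row in rows}

def normalize(row):
    """Map one vendor row onto canonical keys, inches, USD, and color names."""
    inches = row.screen_size / 2.54 if row.size_unit == "cm" else row.screen_size
    rate = USD_RATES.get(row.currency) if row.currency else None
    return {
        "product_id": SKU_ALIASES.get(row.sku, row.sku),
        "screen_in": round(inches, 1),
        # None flags a price that cannot be converted and needs review
        "price_usd": round(row.price * rate, 2) if rate is not None else None,
        "color": COLOR_ALIASES.get(row.color.lower(), row.color),
    }

def normalized_catalog(rows):
    """Dedupe on the canonical product_id, keeping the first record seen."""
    catalog = {}
    for row in rows:
        record = normalize(row)
        catalog.setdefault(record["product_id"], record)
    return catalog

feeds = [
    VendorRow("IP14PM-256", 6.7, "in", 1099.0, "USD", "Space Gray"),
    VendorRow("iPhone 14 Pro Max 256GB", 17.0, "cm", 1019.0, "EUR", "Space Grey"),
    VendorRow("IP14PM-256", 6.7, "in", 899.0, None, "#333333"),
]

print(len(naive_catalog(feeds)))       # 2
print(len(normalized_catalog(feeds)))  # 1
```

The naive version keeps two rows for one phone; the normalized version keeps one. A row with no currency flag is left unconverted rather than guessed, and a real pipeline would reconcile conflicting fields instead of simply keeping the first record.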

Instead of saving time, you end up sinking hours reviewing AI’s work, trying to figure out if it accidentally broke something else. What looked like a shortcut becomes another source of technical debt.

The need for data context

The answer isn’t just in the repo.

Data engineering isn’t software engineering with different libraries - it’s its own discipline.

Before writing a single line of code, a data engineer goes through a process that is as much about understanding context as it is about building pipelines. Take a common case: onboarding a new dataset, such as events from a mobile platform, alongside existing web events. Before touching the pipeline, the data engineer needs to:

Identify the right data. How do event streams get to the platform, and how should they be pulled efficiently?

Understand the data. Once identified, the data must be profiled and interpreted. Do mobile events use the same naming conventions and semantics as web events? How should differences in session IDs, device identifiers, or attribution logic be reconciled so that a “session” or “conversion” means the same thing?

Plan the downstream effects. Writing an ETL job is straightforward, but ensuring it delivers the intended outcomes is far more complex. Are there already transformations in the pipeline that normalize some of these events? What dashboards, models, or metrics depend on these events, and how will they be affected?
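The "plan the downstream effects" step is essentially a graph question. Here is a minimal Python sketch, assuming lineage has already been extracted into a simple adjacency map (the asset names are hypothetical), that finds everything transitively depending on the staging table the mobile events would feed into:

```python
from collections import deque

# Hypothetical lineage graph: each asset maps to the assets that read from it.
LINEAGE = {
    "raw.web_events": ["stg.web_events"],
    "stg.web_events": ["int.sessions", "int.conversions"],
    "int.sessions": ["mart.daily_active_users", "dash.engagement"],
    "int.conversions": ["mart.revenue_attribution"],
    "mart.revenue_attribution": ["dash.marketing_roi"],
}

def downstream(asset, graph):
    """Breadth-first traversal returning every asset that depends on `asset`."""
    seen, queue = set(), deque([asset])
    while queue:
        for child in graph.get(queue.popleft(), []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen

print(sorted(downstream("stg.web_events", LINEAGE)))
# ['dash.engagement', 'dash.marketing_roi', 'int.conversions',
#  'int.sessions', 'mart.daily_active_users', 'mart.revenue_attribution']
```

The traversal itself is trivial; the hard part is building an accurate graph in the first place, which is exactly the context a data engineer normally carries in their head.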

This process relies on context: semantics, lineage, dependencies, and rules. Data engineers gather it by analyzing the data, reading documentation, and talking to producers, consumers, and peers. For them, it’s implicit, but for AI, it must be made explicit.

If AI is going to automate data engineering reliably, it must follow the same process. That means formalizing this hidden knowledge into something concrete: Data Context.

What is Data Context?

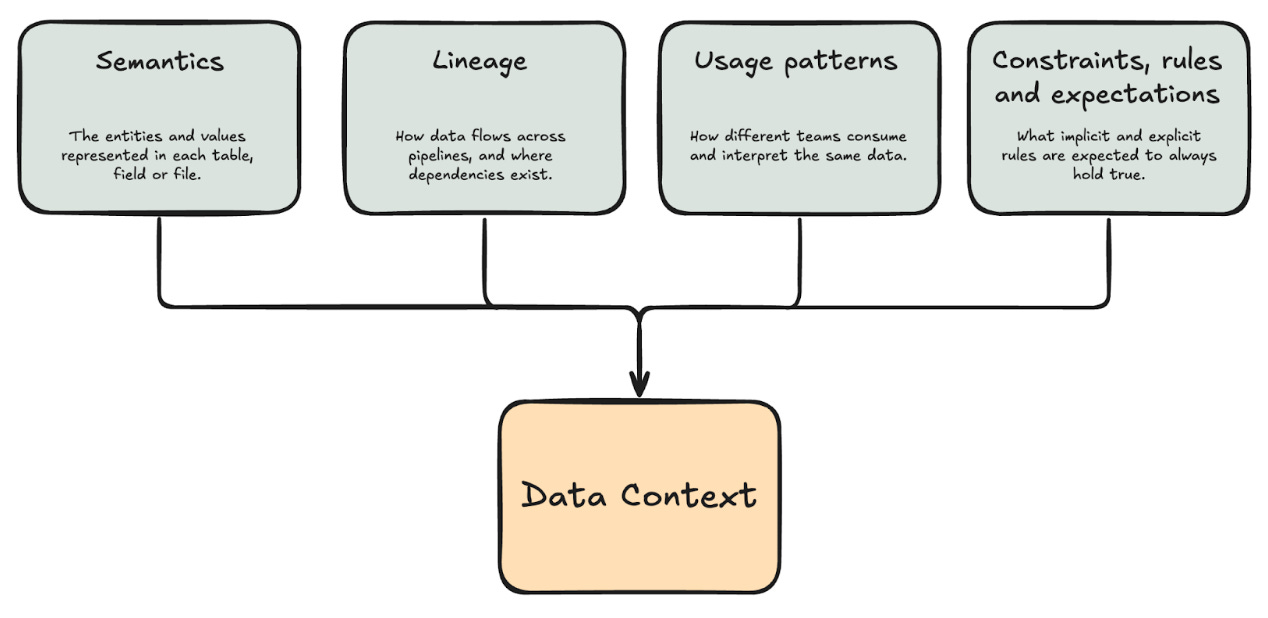

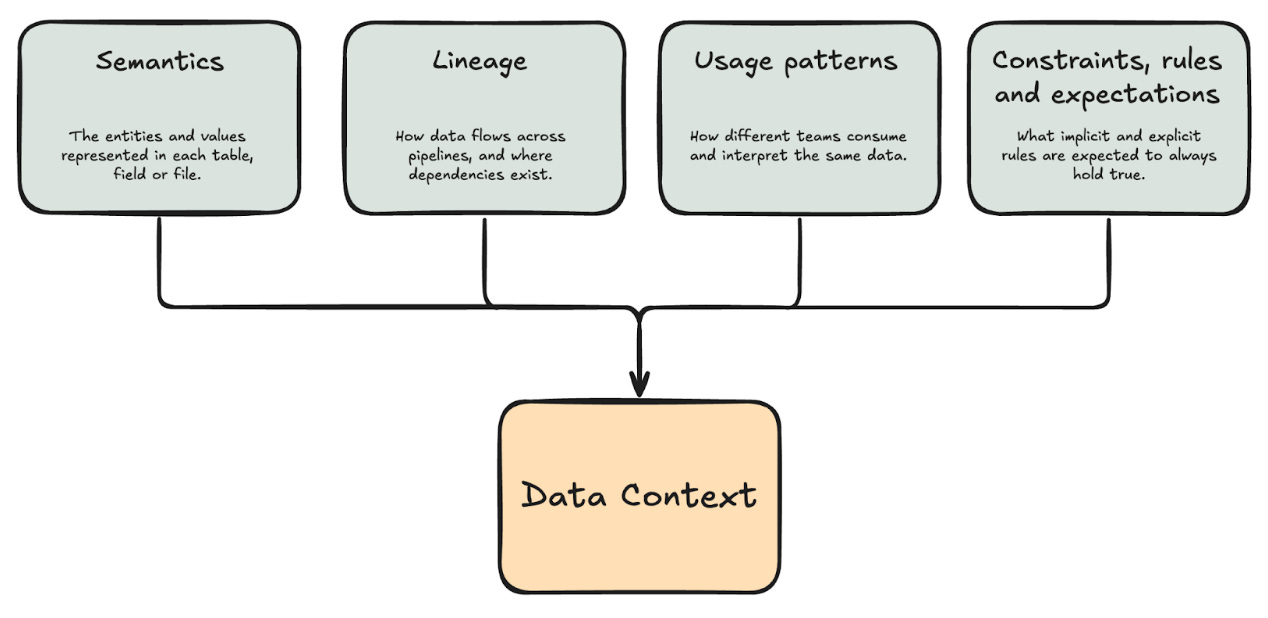

Think of it as the structured knowledge a data engineer builds up in order to make changes safely. It’s the accumulated understanding of what the data means, how it moves, and what rules must always hold true.

It formalizes the following:

Semantics - The entities and values represented in each table, field, or file.

Lineage - How data flows across pipelines, and where dependencies exist.

Usage patterns - How different teams consume and interpret the same data.

Constraints and rules - The implicit and explicit rules that must always hold true.
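One way to picture these four ingredients is as a small data structure. The sketch below is hypothetical (the DataContext class, its fields, and the sample rules are illustrations, not a prescribed format). It borrows column names from the mobile events example and shows how making constraints explicit lets a machine check rules that usually live only in people's heads:

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Row = Dict[str, object]

@dataclass
class DataContext:
    # Semantics: column -> (entity, meaning)
    semantics: Dict[str, Tuple[str, str]] = field(default_factory=dict)
    # Lineage: (upstream, downstream) edges between assets
    lineage: List[Tuple[str, str]] = field(default_factory=list)
    # Usage patterns: consuming team -> assets it reads
    usage: Dict[str, List[str]] = field(default_factory=dict)
    # Constraints: rule name -> predicate that must hold for every row
    constraints: Dict[str, Callable[[Row], bool]] = field(default_factory=dict)

    def violations(self, rows: List[Row]) -> List[Tuple[int, str]]:
        """Return (row index, rule name) for every broken constraint."""
        return [
            (i, name)
            for i, row in enumerate(rows)
            for name, rule in self.constraints.items()
            if not rule(row)
        ]

ctx = DataContext(
    semantics={
        "device_os": ("device", "OS"),
        "device_name": ("device", "Product Name"),
        "session_id": ("session", "Session identifier"),
    },
    lineage=[("raw.mobile_events", "stg.events"), ("stg.events", "mart.sessions")],
    usage={"growth": ["mart.sessions"], "product": ["mart.sessions"]},
    constraints={
        "session_id is present": lambda r: bool(r.get("session_id")),
        "device_os is known": lambda r: r.get("device_os") in {"iOS", "Android"},
    },
)

sample = [
    {"session_id": "s1", "device_os": "iOS", "device_name": "iPhone 14"},
    {"session_id": "", "device_os": "Windows Phone", "device_name": "Lumia"},
]
print(ctx.violations(sample))
# [(1, 'session_id is present'), (1, 'device_os is known')]
```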

Returning to the mobile events example, context could be automatically inferred by:

Profiling the data and mapping attributes to semantic entities such as user, session, device, and location.

Automatically analyzing the lineage, transformation, and usage patterns to understand how each of these semantic entities is created and connected. For example, the columns device_os and device_name can be logically mapped to the device entity, carrying the semantics of OS and Product Name, respectively. This analysis also helps reveal where and how metrics are calculated as part of the pipeline.

Analyzing ETL tool configuration to understand how data is ingested into the platform and on what schedules.

Scanning source systems and code to understand how data is being produced, where it is stored, and if there are any limitations.
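The first of these steps, profiling and mapping attributes to semantic entities, can be sketched in Python. This is a minimal illustration assuming purely name-based rules (the rule table and sample rows are hypothetical); a real profiler would also weigh value patterns, lineage, and usage signals:

```python
import re
from typing import Dict, List, Optional

Row = Dict[str, object]

# Hypothetical name-based rules mapping columns to semantic entities.
ENTITY_RULES = [
    (re.compile(r"^(user|customer|account)_?id$"), "user"),
    (re.compile(r"^session"), "session"),
    (re.compile(r"^device_"), "device"),
    (re.compile(r"^(geo|country|city|latitude|longitude)"), "location"),
]

def guess_entity(column: str) -> Optional[str]:
    """Return the first matching entity, or None if no rule applies."""
    return next((entity for pattern, entity in ENTITY_RULES if pattern.search(column)), None)

def profile(rows: List[Row]) -> Dict[str, dict]:
    """Compute simple per-column statistics plus a guessed semantic entity."""
    columns = sorted({key for row in rows for key in row})
    result = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        non_null = [v for v in values if v is not None]
        result[col] = {
            "null_ratio": 1 - len(non_null) / len(values),
            "distinct": len(set(non_null)),
            "entity": guess_entity(col),  # None: needs more context or a human
        }
    return result

mobile_events = [
    {"user_id": "u1", "session_key": "a", "device_os": "iOS", "geo_country": "US", "C1": 1},
    {"user_id": "u2", "session_key": "b", "device_os": "Android", "geo_country": None, "C1": 3},
]

for column, stats in profile(mobile_events).items():
    print(column, stats)
```

Note that "C1" comes back with no entity: name-based rules cannot explain it, which is where lineage, documentation, and conversations with the data's producers have to fill the gap.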

Notice that this context can be collected independently of the AI reasoning model. This allows for a secure architecture in which sensitive data is not inadvertently sent to an external LLM provider.
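A minimal Python sketch of that separation, assuming the context collector runs inside your own environment and ships only metadata to the reasoning model. The function name and payload shape are hypothetical; the point is that column names, types, and counts leave the boundary while raw values do not:

```python
import json
from typing import Dict, List

def context_payload(table: str, rows: List[Dict[str, object]]) -> str:
    """Summarize a table as metadata only: column names, types, and null counts.

    Raw values are deliberately excluded, so the payload can be shared with an
    external reasoning model without exposing the underlying records.
    """
    columns = {}
    for row in rows:
        for col, value in row.items():
            info = columns.setdefault(col, {"types": set(), "nulls": 0})
            if value is None:
                info["nulls"] += 1
            else:
                info["types"].add(type(value).__name__)
    summary = {
        "table": table,
        "row_count": len(rows),
        "columns": {
            col: {"types": sorted(info["types"]), "nulls": info["nulls"]}
            for col, info in columns.items()
        },
    }
    return json.dumps(summary, sort_keys=True)

rows = [
    {"user_email": "jane@example.com", "amount": 42.5},
    {"user_email": None, "amount": 10.0},
]
print(context_payload("raw.mobile_purchases", rows))
```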

With this context provided to an AI reasoning model, it can then accurately:

Identify the right data by using the context to look for mobile events and understand where they are stored and how they should be ingested into the data platform.

Understand the data to correctly map the attributes of the mobile events to the existing web events while highlighting differences in semantics and names that need to be taken into consideration.

Plan the downstream effects by correctly identifying where in the data lineage the two datasets should be merged in order to correctly apply the relevant deduplication and normalization logic. It can then also propose to add a “source” attribute (mobile vs. web) so dashboards and models can continue calculating unified metrics while still allowing platform-specific breakdowns.
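The downstream plan described above, mapping mobile fields onto the web schema and tagging each row with a source attribute, might look roughly like this minimal Python sketch. The field names and mappings are hypothetical, and deduplicating on (source, event_id) assumes event IDs are unique within each platform:

```python
from itertools import chain
from typing import Dict, Iterator, List

Row = Dict[str, object]

# Hypothetical mappings from each platform's field names to a unified schema.
MOBILE_TO_UNIFIED = {"app_session_id": "session_id", "event_type": "event_name", "install_id": "device_id"}
WEB_TO_UNIFIED = {"session_id": "session_id", "event_name": "event_name", "cookie_id": "device_id"}

def to_unified(rows: List[Row], mapping: Dict[str, str], source: str) -> Iterator[Row]:
    """Rename platform-specific fields and tag each row with its source."""
    for row in rows:
        unified: Row = {target: row.get(src) for src, target in mapping.items()}
        unified["event_id"] = row["event_id"]
        unified["source"] = source  # "web" or "mobile", for platform breakdowns
        yield unified

def merge_events(web: List[Row], mobile: List[Row]) -> List[Row]:
    """Union both feeds, dropping repeated (source, event_id) pairs."""
    seen, merged = set(), []
    for row in chain(to_unified(web, WEB_TO_UNIFIED, "web"),
                     to_unified(mobile, MOBILE_TO_UNIFIED, "mobile")):
        key = (row["source"], row["event_id"])
        if key not in seen:
            seen.add(key)
            merged.append(row)
    return merged

web = [{"event_id": "w1", "session_id": "s1", "event_name": "purchase", "cookie_id": "c1"}]
mobile = [
    {"event_id": "m1", "app_session_id": "a1", "event_type": "purchase", "install_id": "i1"},
    # The same mobile event delivered twice, e.g. after a client retry
    {"event_id": "m1", "app_session_id": "a1", "event_type": "purchase", "install_id": "i1"},
]

merged = merge_events(web, mobile)
for row in merged:
    print(row)
```

Keeping source on every row means a unified metric such as total purchases is a count over all rows, while a platform breakdown is a group-by on source. It also keeps mobile and web sessions distinguishable, since the two may not mean the same thing.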

With this context, AI doesn’t just generate code that looks right - it understands how to ingest the new events, how fields should be mapped, and what changes are safe. Instead of trial-and-error scripts, it can leave the data engineer a clean, reliable PR that only needs review and approval.

Once this context is inferred, AI gains eyes on the data - semantics, lineage, and usage - and can reason like a data engineer before suggesting a change. By capturing and formalizing these signals, Data Context turns scattered, tribal knowledge into an explicit, machine-usable model.

Of course, code generation for pipelines is not the end of the story. Context-aware AI is also needed to validate changes and ensure they are semantically correct and reliable. But context-aware validation is a big enough topic for a blog post of its own.

Where we’re headed

Right now, AI for data engineering feels like autocomplete with a fancy title. It spits out SQL, glues together a pipeline, maybe even runs a test or two. But it doesn’t think like a data engineer.

Imagine the shift once AI has context. Instead of guessing at joins or hoping it picked the right column, it understands your lineage, your semantics, your rules. It knows that “mobile session” and “web session” aren’t the same thing - and it adjusts the pipeline accordingly.

That’s when AI stops being a code toy and starts acting like a teammate. The kind who doesn’t just write scripts, but also helps you find the right data table, checks compliance boxes without drama, and answers the endless “quick questions” from business teams without pulling you out of deep work.

It won’t replace data engineers - but it will change the job. Less time firefighting broken dashboards, more time shaping how data actually drives the business forward.

That’s the future: Data Context turning AI from a code generator into a dependable teammate, so engineers can stop fixing the past and start building the future.

Thank you

Thanks to the brilliant colleagues who collaborated with me on this piece: Omri Lifshitz, Dylan Anderson, Xinran Waibel, Malcolm Hawker, Slawomir Tulski, Bethany Lyons, Tehila Cohen, Oded Geron, Yordan Ivanov, and Shachar Meir. This post grew out of our joint discussions, which truly refined and sharpened it.